Synergistic feedback: Towards New Learning

The shape of learning is changing. In this book we’ve used terms such as ‘transformative’, ‘inclusive’, ‘reflexive’ and ‘collaborative’ to capture dimensions of contemporary educational change or ‘New Learning’. So, too, educational assessment, evaluation and research are changing. Once more, we would like to capture the spirit of change in a word, and the word we have chosen this time is ‘synergistic’.

See Synergistic Feedback Case Studies

Assessment, evaluation and research are about collecting accurate information and developing deeply considered knowledge about learners and the conditions of their learning. Traditionally, these processes were linear, giving teachers and learners end-of-program information about their progress, or public administrations end-of-year information about how effectively taxpayer dollars had been spent. They did not provide a great deal of detailed, constructive feedback at the time when it was most needed: during the learning or program implementation process. If there is one overriding characteristic of the information and knowledge management systems we would aspire to have for a New Learning, it is that they should be more useful and responsive. They should provide as-you-go information to learners, teachers and administrators so they can continuously improve. Synergistic feedback systems provide plenty of rapid, accurate and useful information. Instead of being linear, they provide many cycles of feedback, just enough and just in time.

Dimension 1: Assessment

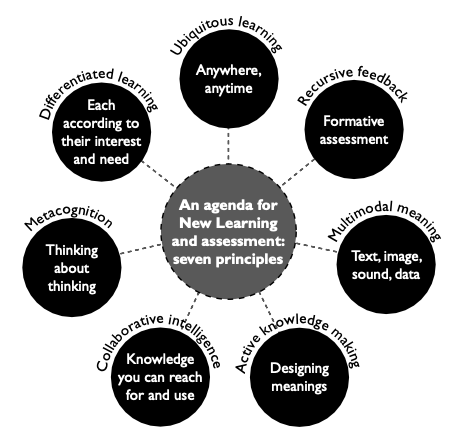

To explain what we mean by synergistic assessment, we are going to give an example that is part real, part wishful thinking. The real part is that the Year 8 student we are going to follow, Sophia, is a composite of actual students with whom we have worked during our research and development projects (Cope et al. 2011). The wishful thinking part is that most schools today are not like this, though they could be soon. Even today, in spots here and there, some already are. We are going to analyse synergistic assessment by exploring what we consider to be its seven key principles.

Principle 1: Ubiquitous learning

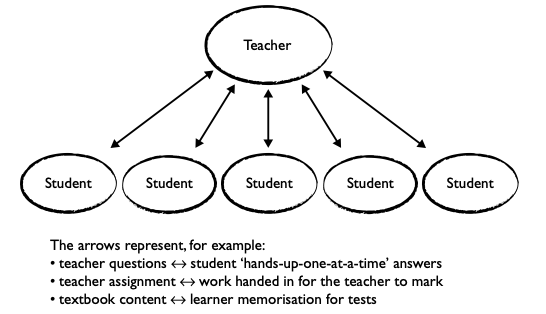

Recall the photographs of the village school in Chapter 2. The teacher sits on their little stage at the front of the class; the children all sit at desks that direct their line of sight to the teacher. In one moment, students silently direct their attention to the textbook, then write notes, all the time focusing their minds on remembering things for the examination to come. In another, the teacher speaks to the class, students dutifully putting up their hands so they can answer the teacher’s questions, one at a time. The discourse structures and knowledge flows are vertical: student attention ↔ textbook; teacher questions ↔ student answers; student attention ↔ notebook; teacher assignment ↔ work handed in for the teacher to mark; textbook content ↔ learner memorisation for tests. This is the archetypical school of didactic pedagogy.

This school is always assessing in one way or another, from the right/wrong answer to the teacher’s question to the score you get when you take the test. This classroom is a communication system, a knowledge architecture. To work, it requires that the class be constrained in space and time. On the space dimension, there are the four walls of the classroom, which always seem roughly to encompass a space of the same size, not too big and not too small for an optimum number of learners. Fewer than about ten students and the room feels empty, and more than about forty and it feels too full. On the time dimension, the knowledge of curriculum is confined in the cells of the weekly timetable, variously called social studies (8.40 am to 9.20) or mathematics (9.20 to 10.00). These cells divide and order the program of learning, week to week, chapter to chapter, test to test.

See Another Brick in the Wall.

Everything is different for Sophia. She is using a ubiquitous learning environment called ‘Scholar’. There, she and her Year 8 peers are working on a number of projects – a mathematics project on using and interpreting statistics (link), a science project on arguments for and against the case that climate change is a serious, man-made problem (jointly written with Maksim, another student in her class) (link) and an English language project exploring narrative, ‘Tell Me a Story’ (link). Different groups of students and individual students are working on different things at the same time, which is easy to arrange because each of her mathematics, science and English teachers has built a project plan for each of these tasks. These sit inside Learning Elements or pedagogical sequences that the teachers have created and published online. The content of mathematics, science or language learning may be more or less familiar, but everything else that is going on in this class is different from the traditional discourse and knowledge relationships of learning in the classroom described above.

We’re going to spend some time with Sophia as she works on her climate change argument to see what these differences are. She is working on a tablet, but other students are on laptops, and that doesn’t matter, because Scholar is a web-enabled but device-independent ‘cloud’ application (Reese 2009). In fact, Sophia and Maksim are able to work in this space, on any project, at any time and at their own pace – not only in the classroom, but at home or in the school library or even during a field trip that the teacher has arranged to visit a climate change exhibition at their regional science museum. This is because the pedagogical designs (learning goals, content resources, instructional items and evaluation/assessment rubrics) have already been set up by the teacher for the climate change project in what is called the Learning Element, including guidelines for producing the argument writing project. Students in this learning environment have been trained to look things up on the web anywhere, anytime, carefully discerning the perspectives of different knowledge sources as they go.

‘These people must be climate change sceptics,’ Maksim says in a status update for Sophia about a website he has just found. Sophia and Maksim can do their learning work anywhere, anytime in this shared cloud space. The architecture of the learning environment they are working in is designed to let them work and communicate freely as needed without being disruptive to others in the class – Ms Homer is proud of her silently noisy class. Sophia and Maksim continue their work together on their jointly authored project even when they are not in the same time and space – in the evenings and over the weekend.

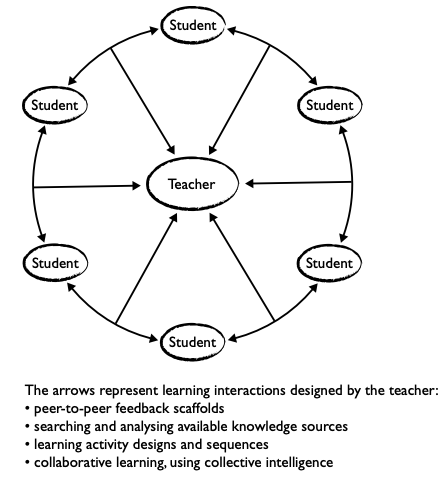

Everywhere in the world around Sophia and Maksim, social media are changing the primary axes of knowledge and meaning making from vertical to horizontal. In their everyday lives outside school, and increasingly within school too, they use devices and networks to connect with an ever larger number of different people and institutions about an increasingly larger number of interests and needs – friends, family, shopping, entertainment, news, games, gossip, information, homework, etc. The way they communicate in these spaces has expanded also. Readers are as often as not also writers; take blogs and social media updates, for instance. Expertise is being supplemented by the ‘wisdom of the crowd’ – in Wikipedia or ratings that push particular user-created videos or images or posts to prominence (Surowiecki 2004). ‘Prosumers’ co-design products; for instance, by adapting their interfaces and arranging their ‘apps’ to meet their needs and suit their interests (Cope and Kalantzis 2010). Elsewhere in this book we have called this an epochal shift in the balance of agency. Sophia’s and Maksim’s classroom has undergone the same kind of axial change.

The learning architecture they are working in, ‘Scholar’, makes the same shift in discursive and epistemic orientation for learning and for classrooms. It uses the same ‘Web 2.0’ technologies that power the new social media (O’Reilly 2005). However, given that its purposes are specific to teaching and learning, it is nuanced differently. Instead of the ‘friend’ relationships that drive Facebook or the ‘follower’ relationships that drive Twitter, Scholar sets up relationships between ‘peers’. Scholar’s focus is on peer support in knowledge making and learning. This happens using learning plans (the Learning Elements) and assessment scaffolds (the Project Plans) that have been created by Sophia and Maksim’s teachers.

There will be more on the specifics of this soon. For the moment, it is sufficient to generalise about the overall frame of reference for ubiquitous learning in formal education. We need new tools, spaces and relationships that re-orientate the flows of knowledge and learning. The vertical communications patterns and hierarchical knowledge flows of didactic pedagogy are re-oriented to become horizontal relationships of learning, scaffolded by the teacher. The conventional time- and space-bounded classroom is no longer needed as the essential architecture for formal education. The Scholar architecture becomes a place of active knowledge creation, for knowledge sharing and development supported by recursive feedback. The outcome is a learning community of constant mutual support, participatory responsibility and lateral learning.

Principle 2: Recursive feedback

The climate change Learning Element that Sophia and Maksim are doing involves a variety of activities, including viewing videos available on the web and visiting the science museum (experiencing the new), looking for websites exploring both sides of the argument (analysing critically) and concept mapping (conceptualising with theory). We have explored these pedagogical ideas already in Chapter 7. Now we are interested specifically in the processes of assessment. Sophia and Maksim have reached a culminating point in this Learning Element where they will write their own argument about climate change (applying appropriately).

Their teacher, Ms Homer, has created a project, ‘The Great Climate Change Debate: Natural or Human?’ that addresses the core curriculum standards for argumentative writing at Year 8 level. In their ‘creator’ screen within Scholar, they have two working panes: a ‘work’ pane on the left, and an ‘about the work’ pane on the right. The project starts with a notification, written into the plan by Ms Homer, to take a survey she has created in the ‘survey’ tool in the ‘about the work’ side. The students who are working on the climate change argument project read two mentor texts: one by a climate change sceptic; the other making the case that climate change is a serious problem, created by human actions. The survey questions focus on the nature of each argument: What are the claims being made to support the overall argument? What evidence is provided (facts, observations)? What concepts are being used and how are they defined? What reasoning is being used, how are implications drawn, and how does the argument draw these together into its own judgement?

The next step in the project is to draft the work. Sophia and Maksim each make two claims in support of their argument, and start to write those sections, using evidence, concepts, reasoning and implications to support their claim. Then, Sophia drafts the introductory overall argument, while Maksim writes a concluding judgement. They then work carefully over each other’s sections, making changes and suggestions as they go. All the while, they are able to see the review criteria for this work, created by the teacher in a tab on the ‘about the work’ side of the screen.

When they are finished they submit the completed draft of their work for peer review. Ms Homer’s project settings are ‘anonymous review’, with everyone undertaking two reviews. Maksim and Sophia each receive for review two texts written by other students. They rate and comment on the texts in the ‘review’ tool in the ‘about the work’ side. Here, Ms Homer has identified the main features of a very successful, quite successful and less successful scientific argument (three levels of rating for the main aspects of argumentation) and also used the annotations tool where reviewers are able to label specific parts of the text (overall argument, claim, evidence, concept, reason, implications and concluding judgement) and make specific comments. Sophia and Maksim know how important it is to give helpful feedback. In fact, they have become good at giving feedback, as reflected in the ‘help credits’ they have gained after undertaking many projects. (And by the way, this feedback-on-feedback helps Ms Homer know which peer ratings should be given more or less weight towards a final rating for the work ... this is how synergistic feedback systems work.)
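The review setting described above (everyone undertakes two reviews, and nobody reviews their own text) can be sketched in a few lines. This is only an illustration, not a description of how Scholar actually allocates reviews: the function name `assign_reviews`, the circular allocation scheme and the simplification that each student is the sole author of one text are all assumptions made for the example.

```python
import random


def assign_reviews(authors, reviews_each=2, seed=None):
    """Give each student `reviews_each` texts to review, never their own.

    Hypothetical scheme: shuffle the class, then have each student review
    the texts of the next `reviews_each` students around the circle. Every
    text therefore also receives exactly `reviews_each` reviews.
    """
    n = len(authors)
    if n <= reviews_each:
        raise ValueError("need more students than reviews per student")
    order = list(authors)
    random.Random(seed).shuffle(order)  # the shuffle hides any seating order
    return {
        reviewer: [order[(i + k) % n] for k in range(1, reviews_each + 1)]
        for i, reviewer in enumerate(order)
    }


# Illustrative class list; the names other than Sophia and Maksim are made up.
students = ["Sophia", "Maksim", "Student C", "Student D", "Student E"]
assignments = assign_reviews(students, seed=1)
for reviewer, texts in assignments.items():
    print(reviewer, "reviews the texts of", texts)
```

Anonymity would be handled at display time, by showing reviewers the text without its author's name. A jointly authored text, such as Sophia and Maksim's, would need the extra rule that neither co-author receives it.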

Feedback is then returned to all the students according to Ms Homer’s project plan. Maksim and Sophia get some especially helpful feedback to help them revise their work, write a new draft based on this feedback and submit for final review and publication to their class ‘bookstore’ by Ms Homer. The class then enjoys reading each other’s work, making comments on the variety of information and perspectives that have come through in the class knowledge bank.

At the end of this process, Ms Homer ends up assessing the students’ science argumentation, too. She has a lot of data coming from multiple perspectives. Using the Scholar tools, these perspectives can be any or all of peer, self, parent, expert (the curator at the science museum) and other critical friend assessments, as well as teacher assessment. These perspectives can include surveys (and there is no reason why these can’t be conventional selected-response tests), reviews, annotations and natural language processing machine feedback on language use, including concept use or argument structures. The result is continuous data collection, and continuous feedback that contributes to learning. Every moment of learning has formative assessment wrapped into it. The data is moderated (weighting more reliable raters, for instance) and combined in a learner progress dashboard that Ms Homer is able to access at any time. There is no need for that strange ritual, the decontextualised test, which attempts to make knowledge inferences based on a sampling of things remembered. Now, we can assess the actual knowledge making work of students, and the data coming out is so comprehensive that summative assessment is no more than a retrospective perspective on a learner’s or a class’s work. All assessment is ‘for learning’ (formative assessment), and from this data we can draw assessment conclusions ‘of learning’ (summative assessment). The old, linear learning assessment flows are replaced by synergistic feedback flows.
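One simple way to picture the moderation step, in which more reliable raters are given more weight, is a weighted average. The sketch below is an assumption made for illustration: the function `moderated_rating`, the idea of expressing reliability as a numeric weight (for instance one derived from ‘help credits’) and the three-point rating scale are not taken from Scholar itself.

```python
def moderated_rating(ratings, reliability, default_weight=1.0):
    """Combine ratings into one score, weighting each rater by reliability.

    ratings:     {rater: score}, e.g. 1 = less, 2 = quite, 3 = very successful
    reliability: {rater: weight}; raters without an entry get default_weight
    """
    total = 0.0
    weight_sum = 0.0
    for rater, score in ratings.items():
        weight = reliability.get(rater, default_weight)
        total += weight * score
        weight_sum += weight
    if weight_sum == 0:
        raise ValueError("no weighted ratings to combine")
    return total / weight_sum


# Two hypothetical peer reviewers disagree; the one with the stronger
# feedback record counts three times as much.
ratings = {"reviewer_a": 3, "reviewer_b": 1}
reliability = {"reviewer_a": 3.0, "reviewer_b": 1.0}
print(moderated_rating(ratings, reliability))  # 2.5, against a plain mean of 2.0
```

The same function could combine peer, self, expert and teacher ratings by giving each perspective its own weight; how those weights are chosen is a pedagogical decision rather than a technical one.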

Principle 3: Multimodal meaning

Scholar is being designed as a ‘web-writing’ environment with a minimalist ‘semantic editor’ toolbar, providing only informationally relevant options (such as ‘emphasis’, ‘list’ and ‘table’) rather than the cacophony of typographical options that clutter and distract in word processors. The reasons are practical: web publishing practices insist on ‘structural and semantic markup’ (Cope, Kalantzis, and Magee 2011) so works can be rendered equally effectively to different devices, from page printouts to phones. They are also pedagogical, directing learner focus on the information architecture and patterns of meaning in their texts.

Crucially for our theory, they are multimodal. Sophia can upload a small video she made on her phone of the curator speaking at the museum they visited. Maksim can upload the spreadsheet he created that underlies the statistical representation in their text. They can upload the audio of their conversation as they discussed a particular claim in their argument. Web writing is not (just) about written text – today it is about multimodal representation of knowledge.

So it is for assessment. Traditional memory tests were limited to pencil marks on the answer sheets of selected response assessments or handwritten responses to open-ended questions. Now we have a whole panoply of tools available to represent our knowledge, including the ability to show not just how well we can analyse a problem or demonstrate a disciplinary practice, but distinguish our own voice from the sources we draw upon and cite – the texts, images, videos, sound, datasets, etc. available to us on the web.

Principle 4: Active knowledge making

Sophia and Maksim are active knowledge makers. In another era, they might have memorised the content given them by teacher and textbook, and demonstrated their level of understanding by getting however many answers right to the questions in the test. Standardisation meant every student answered the same questions and the tests were closed book. Today, they have become active knowledge makers, where assessment feedback supports their knowledge making processes. Self, peer and teacher assessments all raise the same questions about the quality of their knowledge processes. How well are the claims they make as part of their scientific argument supported by well-grounded evidence, clearly defined concepts, powerful reasoning and thorough exploration of implications? What progress is made from the first draft to the completion of the second? This kind of formative assessment positions the learner as a knowledge producer, in contrast to heritage tests, which positioned the student as a memorising knowledge consumer.

Principle 5: Collaborative intelligence

Once we used to remember many phone numbers. Today the address book in our phone remembers them for us. Once we used to have to memorise endless lists of irregular spellings. Checker programs (such as the one Maksim and Sophia have in Scholar, and the predictive text in our phones) mean that we don’t need to rely so much on memory work. This is not to say that machines relieve our need to think hard. In many ways, we have to learn the very new skills of navigation through the masses of information we have on hand all the time, and discernment of what is relevant and what is not, always with a critical eye to the interests and perspectives of the information source. This is the first dimension of collaborative intelligence supported by the Scholar teaching and learning environment: the capacity to navigate to, critically review and then, if relevant, link to the knowledge of the world and the many perspectives on that knowledge that are nowadays available online.

The second dimension is the social work of knowledge making: working collaboratively on the same text, as Sophia and Maksim happen to be, or taking part in the scaffolded feedback process, where other people must be credited as knowledge contributors because they have provided formative assessments that improve the quality of the final work. This is in sharp contrast to the ‘cover up your work’ of individualised memory tests or teacher-marked assignments.

A third dimension is that the knowledge work of the learners is oriented to the community of learners, not to the assessing teacher, the audience of one who does not have a lot of time to give more than a few cursory comments and an 83 (very good), 61 (OK) or 42 (not very good). Nor is it standardised, producing reliable assessment data because every student is tackling an identical assessment prompt. When their climate change project is completed, it gets published to their class ‘bookstore’ for others in the class to read. It prompts discussion in their Facebook-like ‘community’ space. It gets more comments and ratings, which add more information about the quality of the work, and might even prompt further revisions. As it transpires, the climate change projects created by the students in Sophia’s class are interestingly different. Some students have focused on the impacts on humans of rising sea levels, others on the specifics of local action that might be taken to address climate change, still others on the changes taking place in remote, frozen ecosystems. The result is not a set of scripts that are evaluated according to their sameness, but a class knowledge bank where shared knowledge is valued for the additional and complementary information that different perspectives offer.

These three dimensions add up to a phenomenon that cognitive scientists call ‘distributed cognition’. This is what Scholar’s assessment system measures – not the stuff you can remember, but the quality of the knowledge work you can do as you reach for available knowledge sources and work collaboratively with peers. In fact, the shift of assessment moves away from elusive cognitive constructs, such as ‘understanding’ or ‘intelligence’, to assessment of knowledge artefacts, the products of students’ knowledge representations. The evidence is in the knowledge representation itself. To assist this, both teacher and learner can look into their ‘dashboard’ at the mashup or synthesis of a huge amount of evaluative data that is collected incidentally to the making and sharing of the work itself. Scholar is even able to ‘see’ who spent what time on a jointly created work, and who contributed what.
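As a rough illustration of how data collected incidentally to the work could show ‘who spent what time’ and ‘who contributed what’ on a jointly created text, the sketch below totals a log of editing sessions per author. The log format (author, seconds spent, words added), the function name `contribution_summary` and the use of word counts as a proxy for contribution are all assumptions for the example, not features documented for Scholar.

```python
from collections import defaultdict


def contribution_summary(edit_log):
    """Total editing time and words added per author, plus share of words.

    edit_log: iterable of (author, seconds_spent, words_added) tuples,
              a hypothetical record of editing sessions on one shared text.
    """
    totals = defaultdict(lambda: {"seconds": 0, "words": 0})
    for author, seconds, words in edit_log:
        totals[author]["seconds"] += seconds
        totals[author]["words"] += words
    all_words = sum(t["words"] for t in totals.values())
    return {
        author: {**t, "word_share": t["words"] / all_words if all_words else 0.0}
        for author, t in totals.items()
    }


# Made-up sessions on the jointly authored climate change argument.
log = [("Sophia", 600, 150), ("Maksim", 300, 50), ("Sophia", 120, 0)]
for author, stats in contribution_summary(log).items():
    print(author, stats)
```

A word count is a crude measure: the third session above adds no words but may have been careful revision of a partner's section, which is why time spent is reported alongside it.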

See Brown et al., Distributed Expertise in the Classroom.

Of course, the other side of these environments is negative interventions by disruptive students and bullying. Scholar’s flagging system will alert Ms Homer to inappropriate or hurtful contributions. However, bullying may prove to be less prevalent than in the informal peer interaction spaces of didactic pedagogy because every interaction is recorded; it is accessible to the teacher in an audit trail; and this recorded data is the basis for clearly focused after-the-event analysis. The privacy of whistleblowing flags can be maintained, if the teacher considers this appropriate. On the other hand, the social logic of the space at every point is oriented to the reciprocity and mutuality of practical ‘help’ in the process of knowledge making.

Principle 6: Metacognition

Scholar fosters metacognition, or thinking about thinking, at many levels. It has a semantic tagging system for defining and finding content. Its ‘structure’ tool focuses the learner on the information architecture of their knowledge representation. Indeed, the general emphasis on ‘structure and semantics’ focuses the learner on thinking about their thinking processes.

However, perhaps the most significant metacognitive move Scholar makes is to share assessment responsibility with learners, in self- and peer-assessment. Pragmatically, this spreads the burden, providing Ms Homer with more data sources. But it also means that Sophia and Maksim are getting more feedback. And it’s much quicker than if they had to wait for the teacher to collect, mark and hand back their work. Also, learners are positioned metacognitively as they have to review, and write and label annotations, and undertake surveys. It requires that they explicitly consider the nature of their own knowledge and other knowledge. It creates a culture of knowledge reciprocity where learners benefit from each other’s metacognitive insights. The outlines of these have been created by the teacher in review formats, annotation label sets and surveys. But instead of these being operationalised in a vertical evaluation teacher ↔ student assessment process, they are used as the metacognitive frame of reference for student ↔ student assessment.

Principle 7: Differentiated learning

The traditional classroom of didactic pedagogy required that every learner was on the same page at the same time. Some felt lost because the work was too hard. Others became bored because it was too easy. The teacher shot for the middle of the class, hoping that the lesson was about right for most students. In the era of ubiquitous learning, an underlying organisational logic of sameness can be replaced with a new logic of difference, and without letting go of standards and well-measured learner outcomes.

In her ‘publisher’ space within Scholar, Ms Homer has some students working on different projects because they are more interested in these topics and they are just as effective a way to learn scientific argumentation. She has some students doing more challenging work, others less. Some are moving through their tasks faster than others. She asks some more advanced students to provide feedback to less advanced. Because they are working ‘in the cloud’, Ms Homer can click into any project group, and see right into any student work and where they are up to on their project plan. She can also get on-the-fly data on how well an individual student or group is doing. She can modify project plans as she goes, if that appears needed. This cacophony of complexity would be unmanageable chaos in the conventional classroom, but here it all is now, laid out neatly in front of her, with every detail just a click away. Genuinely differentiated instruction, adapted to the needs of every learner, is at last a possibility.

Many of the things Ms Homer has managed to achieve are old aspirations in education; things such as differentiated instruction, individualised projects, peer mentoring and group learning. They were always hard to do, but now we can do them, and quite easily. And because we can, perhaps we should. New media help. They make the always desirable more readily possible. Perhaps the biggest shift in this move to a New Learning is the synergistic assessment systems that make learners into teachers. Teachers build peer–peer student-feedback scaffolds, positioning learners to do assessment tasks that once only teachers did. Meanwhile, teachers become learners, adapting their teaching with the constant feedback they are getting about their learners’ progress.

Learning is the new teaching. Teaching is the new learning.

AFFORDANCES OF NEW TECHNOLOGY FOR ASSESSMENT FOR NEW LEARNING

| Principle | Affordances |
| --- | --- |
| Principle 1: Ubiquitous learning | • A change in the primary axes of knowledge making and meaning-action from vertical to horizontal • A shift in the balance of agency for knowledge making and learning • A learning community of constant mutual support, participatory responsibility and lateral learning |
| Principle 2: Recursive feedback | • Assessment of the knowledge making work of students • All assessment is ‘for learning’ (formative assessment), and from this data we can draw assessment conclusions ‘of learning’ (summative assessment) • Synergistic feedback flows |
| Principle 3: Multimodal meaning | • Tools to represent knowledge, including the ability to show not just how well we can analyse a problem or demonstrate a disciplinary practice but distinguish our own voice from the sources we draw upon and cite – the texts, images, videos, sound, datasets, etc. available to us on the web |
| Principle 4: Active knowledge making | • Positioning of the learner as a knowledge producer, in contrast to heritage tests, which positioned the student as a memorising knowledge consumer |
| Principle 5: Collaborative intelligence | • Capacity to navigate to, critically review, then, if relevant, link to the knowledge of the world and the many perspectives on that knowledge that are nowadays available online • The social work of knowledge making • The knowledge work of the learners is oriented to the community of learners • Distributed cognition |
| Principle 6: Metacognition | • Assessment responsibility shared with learners, in self- and peer-assessment • A culture of knowledge reciprocity |
| Principle 7: Differentiated learning | • Genuinely differentiated instruction, adapted to the needs of every learner • Differentiated instruction, individualised projects, peer mentoring and group learning |

Dimension 2: Evaluation

Evaluation is no less impacted by the kinds of changes we have described in this book: the shift in the balance of agency, intensely felt local and global diversity and the effects of social web technologies to name just three of the large changes of our times. There is more evaluation, from teacher evaluation, to program evaluation, to evaluations that even determine the fate of educational institutions that may have supported communities for many decades. In the spirit of synergistic feedback, credible evaluations can never again avoid the large questions: What are our underlying agendas? Are our terms of reference limited? Who or what is neglected or excluded? And, in this ‘knowledge society’, there is no excuse for not using the best data collection processes and data analytics, qualitative and/or quantitative.

Dimension 3: Research

Finally, when it comes to research, we need to think holistically as we develop educational knowledge. We may choose to have a quantitative bent, and for good reason, or a qualitative bent, for equally good reason.

However, we need to move beyond these dichotomies, or uneasy compromises, such as ‘mixed methods’, which still accept the old frames of reference in the methods wars. We need a broader view of educational ‘science’. Picking up on the knowledge process approach we outlined in Chapter 7, here is the range of epistemic moves we need to make as researchers, whether we are academic professional researchers in teacher-education institutions or teacher-professional researchers working with learners in schools.

See Lankshear and Knobel on Teacher Research.

| Knowledge process | Epistemic move | Includes |
| --- | --- | --- |
| Experiential | Prior evidence | • Previous research • Experience and identity |
| Experiential | New empirical work | • Quantitative surveys • Qualitative surveys • Observation • Experimentation |
| Analytical | Explanation | • Data analysis • Deductive reasoning • Causal analysis |
| Analytical | Critique | • Interrogation of perspectives, interests • Interpretation of agendas |
| Conceptual | Categories | • Clear definitions |
| Conceptual | Theory | • Connecting the categories • Inductive reasoning |
| Applied | Pragmatic implications | • Direct applicability • Immediate solutions |
| Applied | Innovation | • Generalisability to different settings • Wider applicability |